Mac users can find the Toree kernel config file at `/usr/local/share/jupyter/kernels/toree/kernel.json`, for example by opening it with `vi /usr/local/share/jupyter/kernels/toree/kernel.json`. On other operating systems, search for this file in the same way. In this file, set SPARK_HOME to your Spark home directory and we are done.
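If you would rather not edit the file by hand, the change can be scripted. The sketch below uses a hypothetical helper, `set_spark_home`, which is not part of Toree. It assumes the kernel spec keeps the kernel's environment variables in the standard `env` object of `kernel.json`, overwrites `SPARK_HOME` there, and leaves every other key untouched.

```python
import json
from pathlib import Path


def set_spark_home(kernel_json: str, spark_home: str) -> dict:
    """Point a Toree kernel spec at a Spark installation (hypothetical helper)."""
    path = Path(kernel_json)
    spec = json.loads(path.read_text())
    # Kernel specs hold the kernel process's environment variables under "env";
    # create that block if it is missing, then overwrite SPARK_HOME only.
    spec.setdefault("env", {})["SPARK_HOME"] = spark_home
    path.write_text(json.dumps(spec, indent=2))
    return spec
```

For example, call `set_spark_home("/usr/local/share/jupyter/kernels/toree/kernel.json", "/path/to/spark")`, where the second argument is a placeholder for your own Spark directory. The environment is read when the kernel starts, so restart any running Toree kernel afterwards.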

#INSTALL APACHE SPARK FOR JUPYTER INSTALL#

2. `jupyter toree install`

3. Jupyter has a concept of kernels. By default, Jupyter provides a Python kernel. For Apache Spark, we have Apache Toree, which is a Spark kernel (we will get into the details of the Spark kernel in another article). Apache Toree gives us the ability to run a Spark instance in Jupyter Notebook. Apache Toree (Spark Kernel) needs SPARK_HOME, which means you should have Spark installed (standalone or cluster).

3.1 Install Apache Spark. You can skip this part if you already have Spark installed. I have tested and verified that v1.4 works with the Spark kernel.

3.2 Point Apache Toree (Spark Kernel) to SPARK_HOME.
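Because Toree depends on SPARK_HOME, checking it before launching the kernel can save some debugging. The following is a minimal sketch that assumes a standard Spark distribution layout with launcher scripts under `bin/`. `find_spark_home` is a hypothetical helper, and `bin/spark-submit` serves only as a marker that the directory really is a Spark installation.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def find_spark_home(env: Optional[Mapping[str, str]] = None) -> Path:
    """Return SPARK_HOME as a Path, failing early if it does not look like Spark."""
    env = os.environ if env is None else env
    value = env.get("SPARK_HOME")
    if not value:
        raise RuntimeError("SPARK_HOME is not set; install Spark and export SPARK_HOME first")
    home = Path(value).expanduser()
    # A Spark distribution ships spark-submit in bin/; treat it as a sanity marker.
    if not (home / "bin" / "spark-submit").is_file():
        raise RuntimeError(f"{home} does not contain bin/spark-submit")
    return home
```

Calling `find_spark_home()` with no arguments checks the current process environment. Passing a dictionary makes the check easy to try against a candidate path before you write it into `kernel.json`.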

#INSTALL APACHE SPARK FOR JUPYTER DRIVER#

I expect you have Python (and pip) installed on your system. I used OS X for development, but these steps will be similar on Linux machines.

The first step, similar to dask-kubernetes, is building a container with Jupyter and Spark installed. We also need to build a Spark container for the executors. In addition to the containers, we need to set up permissions on the cluster and ensure that the executors your Spark driver launches have a way to talk back to the driver.
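The next sketch illustrates the networking requirement by collecting the driver-side settings for a driver that runs inside the Jupyter container and launches executors on Kubernetes in client mode. It makes several assumptions. It uses Spark's native Kubernetes scheduler, and client mode there needs Spark 2.4 or later. It expects a headless Service, or something similar, to give the notebook pod a DNS name that executors can resolve. It also assumes RBAC permissions are already in place. The helper name, the example hostnames, and the port numbers are hypothetical. The property keys are standard Spark configuration properties.

```python
def k8s_client_mode_conf(api_server: str, image: str, driver_host: str,
                         namespace: str = "default", executors: int = 2,
                         driver_port: int = 29413,
                         block_manager_port: int = 29414) -> dict:
    """Spark properties for a notebook-hosted driver using Kubernetes executors.

    Hypothetical helper; the port defaults are arbitrary example values.
    """
    return {
        # The k8s:// prefix selects Spark's Kubernetes scheduler backend.
        "spark.master": f"k8s://{api_server}",
        # Image the executor pods are started from (the Spark executor container).
        "spark.kubernetes.container.image": image,
        "spark.kubernetes.namespace": namespace,
        "spark.executor.instances": str(executors),
        # Address and fixed ports executors use to connect back to the driver;
        # fixed ports can be exposed through the Service in front of the notebook pod.
        "spark.driver.host": driver_host,
        "spark.driver.port": str(driver_port),
        "spark.driver.blockManager.port": str(block_manager_port),
        # Listen on all interfaces inside the notebook container.
        "spark.driver.bindAddress": "0.0.0.0",
    }
```

In the notebook, you could pass these pairs to `SparkConf().setAll(conf.items())` and then call `SparkSession.builder.config(conf=...).getOrCreate()`. All values are strings because SparkConf stores properties as strings.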

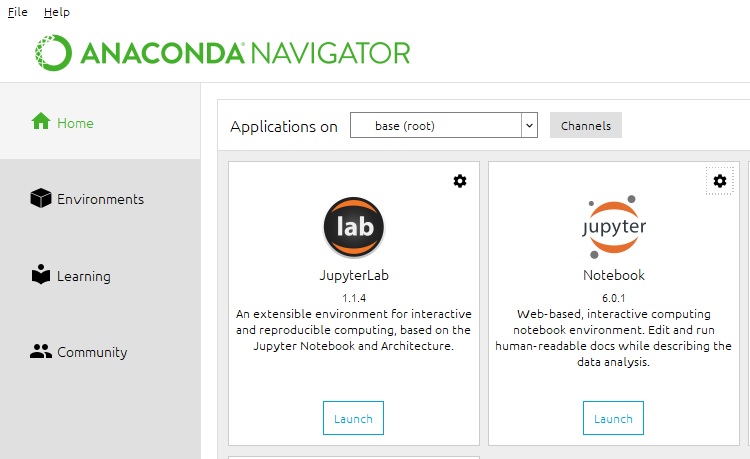

There is a lot of buzz about Apache Spark these days, and if you are coming to this article, I expect you already know what Apache Spark is. Today I will explain how you can get yourself a running instance of Jupyter Notebook that can run Apache Spark.

A notebook is a great way to deliver tutorials, demos and presentations of any code. Gone are the days when people used PowerPoint in their code demos. Jupyter and Zeppelin are the two most popular notebooks available today.

The steps outlined below will install:

- Apache Cassandra
- Apache Spark
- Apache Cassandra - Apache Spark Connector
- PySpark
- Jupyter Notebooks
- Cassandra Python Driver

Note: no set of install instructions works in all cases. Hopefully this works for you (as it did for me), but if not, use it as a guide.

Installing and Exploring Spark 2.0 with Jupyter Notebook and Anaconda Python on your laptop:

1. Objective
2. Installing Anaconda Python
3. Checking Python Install
4. Installing Spark
5. Checking Spark Install
6. Launching Jupyter Notebook with PySpark 2.0.2
7. Exploring PySpark 2.0.2: a. Spark Session, b. Rea.
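When PySpark is started from a plain Jupyter kernel, a common approach is to pass extra packages such as the Cassandra - Spark connector through the `PYSPARK_SUBMIT_ARGS` environment variable. This variable must be set before the first SparkSession is created in the process, and it must end with `pyspark-shell`. The sketch below relies on those assumptions. `cassandra_submit_args` is a hypothetical helper. The connector coordinates are a placeholder that you must match to your Scala and Spark versions, and `127.0.0.1` assumes a local Cassandra node. `spark.cassandra.connection.host` is the connector's contact-point setting.

```python
import os


def cassandra_submit_args(connector: str, cassandra_host: str) -> str:
    """Build a PYSPARK_SUBMIT_ARGS value that adds a Cassandra connector (hypothetical helper)."""
    parts = [
        "--packages", connector,  # Maven coordinates, resolved by spark-submit at startup
        "--conf", f"spark.cassandra.connection.host={cassandra_host}",
        "pyspark-shell",  # PySpark expects this token at the end
    ]
    return " ".join(parts)


# Placeholder coordinates: replace <version> with a release matching your Spark install.
os.environ["PYSPARK_SUBMIT_ARGS"] = cassandra_submit_args(
    "com.datastax.spark:spark-cassandra-connector_2.11:<version>", "127.0.0.1")
```

After this cell, creating a session with `SparkSession.builder.appName("cassandra-demo").getOrCreate()` should start Spark with the connector on its classpath. If a session already exists in the kernel, restart the kernel first so that the new arguments take effect.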
